Welcome to Lead with AI's practical Tuesday edition!

In this edition, I'm bringing you the latest must-know AI tools and stories:

- OpenAI launches podcast: Sam Altman on the future of AI

- Your AI Team: Midjourney V1 Video, Gemini’s video upload, and ChatGPT’s Record Mode.

- In 5 Steps: Get weekly industry updates sent to your Slack (Zapier RSS + ChatGPT)

- New tools: Ciro, LLM SEO FAQ, and Chronicle.

- Must-read News: What’s in The OpenAI Files.

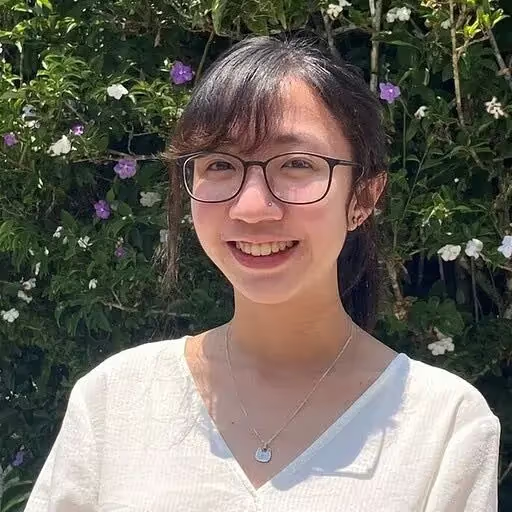

Before we dive in: Join our live session tomorrow and learn how to turn ChatGPT into your business operating system. Grab one of the last remaining seats, and get the $99 “Learn ChatGPT in 7 Days” course free when you join live!

Let’s dive in!

Sam Altman: GPT-5 is coming

OpenAI kicked off its new podcast with a conversation between CEO Sam Altman and former OpenAI engineer Andrew Mayne. And it’s one of the most illuminating AI interviews of the year. In it, Altman offers an unfiltered view of where things are heading, what keeps him up at night, and why he’s raising his child with help from ChatGPT.

Here’s what stood out and why it matters to leaders:

- AI is growing fast: AI models are already "smart now" and will "keep getting smarter" and "improving". More people will likely perceive systems as AGI each year, even as the definition of AGI becomes more ambitious. GPT-5 is anticipated "sometime this summer," but the distinction between major version jumps and continuous improvements is becoming less clear.

- AI's transformative impact on our productivity and capacity: Current AI systems are already increasing people's productivity and are "able to do valuable economic work". Future generations will grow up more capable than we did, able to "do things that would just, we cannot imagine" because they will be really good at using AI.

- The critical need for massive compute infrastructure: A huge gap exists between current AI capabilities and what could be achieved with "10 times more compute or someday, hopefully, a 100 times more compute". Initiatives like Project Stargate are an "effort to finance and build an unprecedented amount of compute" globally. The scale of this investment could be in the hundreds of billions of dollars, potentially $500 billion.

- Privacy and trust as foundational principles for AI adoption: Privacy needs to be a core principle of using AI, as people have "quite private conversations with ChatGPT now". Companies like OpenAI are committed to fighting attempts to compromise user privacy, such as requests for extended user record preservation.

- Collaborative competitive landscape: OpenAI's current preferred business model is direct payment for "good services". The AI industry is not a winner-takes-all scenario. The discovery of AI is akin to that of the transistor, meaning "many companies are gonna build great things on that, and then eventually it's gonna... seep into almost all products". The overall "pie is just gonna get bigger and bigger".

- Navigating human-AI interaction: Society will need to find new guardrails, as people may develop "somewhat problematic or maybe very problematic parasocial relationships" with AI. A key challenge is aligning AI behavior for long-term user benefit, as models optimized for short-term user signals might not be healthy for a user in the long run.

- “Learn to use AI” is the new “learn to code”: Mastering AI tools is now foundational, like programming once was. Beyond technical skills, resilience, adaptability, creativity, and figuring out what other people want are "surprisingly learnable" and will pay off soon.

- OpenAI is building hardware: Current computer hardware and software were "designed for a world without AI". The vision is for AI to be deeply integrated, enabling a "totally different kind of how you use a computer to get done what you want" by trusting it to understand context, make judgments, and manage follow-ups on your behalf. OpenAI is actively working on developing high-quality hardware to realize this vision, though it will take time.

If you’re leading a company, raising a family, or building the next product, you’re already living in an AI-native world. Altman’s message is clear: the future is arriving faster than we think, and those who understand the tools, and the infrastructure behind them, will shape what comes next.

Read more news at the end.