In the 18 months since I started teaching AI to business leaders, the landscape has transformed dramatically: AI has gone from tech hype to a strategic imperative that is top of mind for most leaders.

According to McKinsey, 75% of companies now use generative AI in at least one function, more than double the share from a year earlier.

In “No AI, No Job,” Danielle Abril of The Washington Post highlights a new wave of "AI-first" companies like Duolingo, Shopify, and Box.

Even Klarna, which partially walked back its approach by rehiring some human customer service reps, now generates an impressive $1 million in revenue per employee, largely thanks to strategic AI integration.

But Klarna and Duolingo are the exceptions: 99% of organizations remain stuck, still experimenting rather than truly scaling.

Why is full adoption so hard to come by? And how do companies move from AI-inspired to AI-first?

Successful AI transformation requires alignment between two critical forces: top-down strategic clarity and bottom-up practical experimentation, with a thriving connective layer that most companies overlook:

Top-Down: Strategic Clarity with Realism

While it’s employees who actually use AI day to day, clear strategic direction from leadership is critical.

Executives must define exactly how AI supports their business strategy. Many companies start by committing to one or more major platforms (ChatGPT, Copilot, Gemini), then customizing and integrating them for scale, security, and fit.

This mirrors McKinsey's framework of takers, shapers, and makers: McKinsey advises that most companies are best served as shapers, customizing and integrating existing platforms for scalability, security, and strategic fit rather than building their own.

But as BCG rightly warns, generative AI isn't just a technology upgrade:

“Companies have treated GenAI like a typical technology upgrade or a collection of pilots, with tech teams leading the way. While this is fine for the technology side of the equation, it fails to achieve real bottom-line impact,” writes BCG in a September 2024 report.

Take Microsoft Copilot as an example: it might seem a safe choice when your data already sits within Microsoft's ecosystem. But what about team members who feel ChatGPT or Claude vastly improves their capacity, capability, and quality of work?

Do you block those tools or reconsider your platform strategy? Or do you, like one of the companies we’re working with on their AI transformation, broaden your approach?

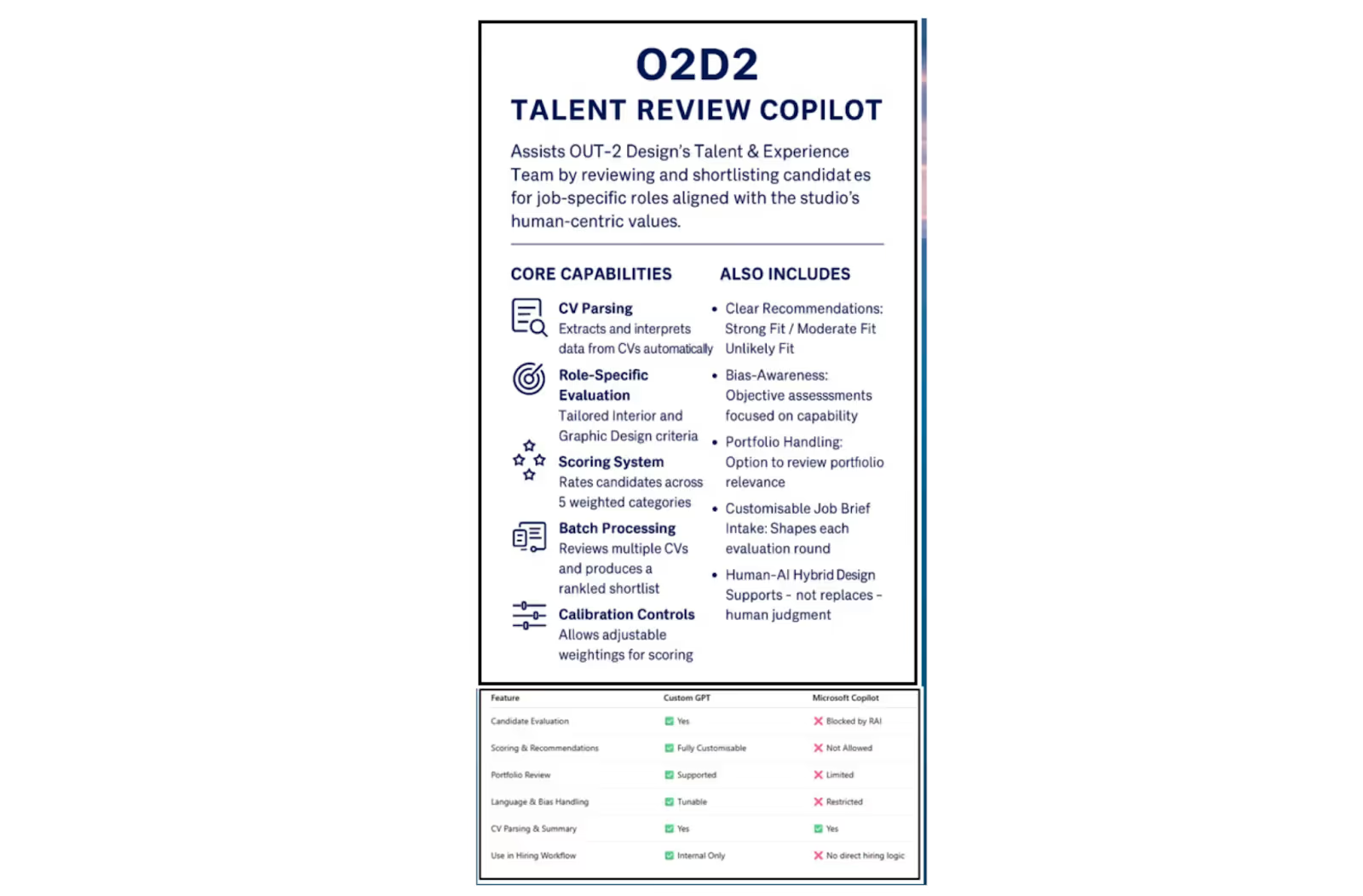

“Different tools bring different strengths, and using ChatGPT alongside Copilot allows us to match the right platform to the task, and even to cross-pollinate thinking between them. It’s less about choosing one over the other, and more about building a collaborative ecosystem of AI agents.” – Andrew Currie, CEO, OUT-2 Design Group.

Winning leaders actively shape expectations, because AI initiatives need iteration and less than half of users adopt their company’s AI platform. This is especially true of Microsoft Copilot (our live sessions sometimes feel like therapy for its users, and its challenges probably deserve an article of their own).

Visible, practical support from executives is equally crucial, as Debbie Lovich from BCG emphasized to me: “Don’t ask your employees to do anything that you wouldn’t do yourself.” And indeed, her research shows that teams with AI-engaged managers are 4x more likely to adopt AI tools.