OpenAI DevDay 2025: New Platforms & Tools, and How OpenAI Works With Its Own AI

Introduction

When Sam Altman walked on stage at DevDay 2025 in San Francisco, the air in the room felt different. The crowd was ready for the usual cascade of model updates and API launches. Instead, Altman opened with something larger: a vision of how AI is becoming part of everyday work.

He began with numbers that sounded almost unreal. OpenAI now has more than four million developers, 800 million weekly ChatGPT users, and six billion tokens processed every minute. But beyond the scale, the message was clear. AI is no longer a side project or an experiment sitting on the edge of an organization. It has moved to the center of how teams operate, decide, and deliver.

That idea came to life through what followed. For the first time, OpenAI revealed how it has been using its own technology internally across sales, HR, and support. It was not just a showcase of features. It was a playbook for how AI changes the shape of work itself.

Opening: From Platform to Practice

The keynote began like a product unveiling. Altman introduced a set of new pillars designed to make AI development faster and easier to integrate: the Apps SDK, AgentKit, and Codex.

The Apps SDK turns ChatGPT into a platform where apps and conversations blend into a single workflow. Apps from pilot partners, including Booking.com, Canva, Coursera, Figma, Expedia, Spotify, and Zillow, are available today in various markets. AgentKit provides developers with visual tools to build, deploy, and optimize agentic workflows, including Agent Builder, ChatKit, and Guardrails for safety. Codex, now powered by GPT-5, writes, refactors, and deploys code with a new kind of adaptive reasoning that grows more capable the longer it runs.

But behind those tools was something more important. OpenAI was showing how these systems make work itself more fluid. What used to take months of software development can now happen in minutes. What used to require a team can begin with an idea. The boundary between human intention and technical execution is shrinking.

In a shift noticed by Fast Company, OpenAI “talked less about AGI and more about making AI do real work that matters.” That focus on utility over novelty is what transforms DevDay 2025 from a product launch into a blueprint for how organizations adopt AI.

Yet it also revealed something larger about the market itself. With ChatGPT now serving as both platform and operating system, OpenAI effectively absorbed the “plumbing layer” of the AI startup ecosystem. Together, the Apps SDK, AgentKit, and Codex cover almost everything early-stage companies once pitched as differentiation: workflow builders, chat interfaces, data connectors, evaluation ops, and even monetization through Instant Checkout.

That doesn’t kill innovation, but it does raise the bar for what “value-add” means. Startups now have to move up the stack, from infrastructure to domain intelligence and trust layers. The opportunity shifts from “building on GPT” to embedding inside real work, like patient care, compliance, sales enablement, or manufacturing ops.

As one founder joked after the keynote: “It’s not the end of AI startups, it’s the end of easy ones.”

OpenAI’s strategy mirrors earlier platform cycles. Just as browsers absorbed web navigation and smartphones absorbed app interfaces, ChatGPT is now becoming the browser of work, a conversational environment where apps, agents, and content coexist. Distribution is changing, and so is control: if you build inside ChatGPT, you can reach hundreds of millions instantly, but you live under OpenAI’s ecosystem rules.

Inside OpenAI: How AI Amplifies Performance, Organizes Knowledge, and Automates Learning

- Sales: Amplifying Expertise with the Go-to-Market Assistant

Sales at OpenAI is a fast-moving, high-pressure world. New features launch every few weeks, and every customer wants to understand what those updates mean for their business. By last year, the team was stretched thin. They needed a way to prepare faster, respond to technical questions, and maintain quality without burning out.

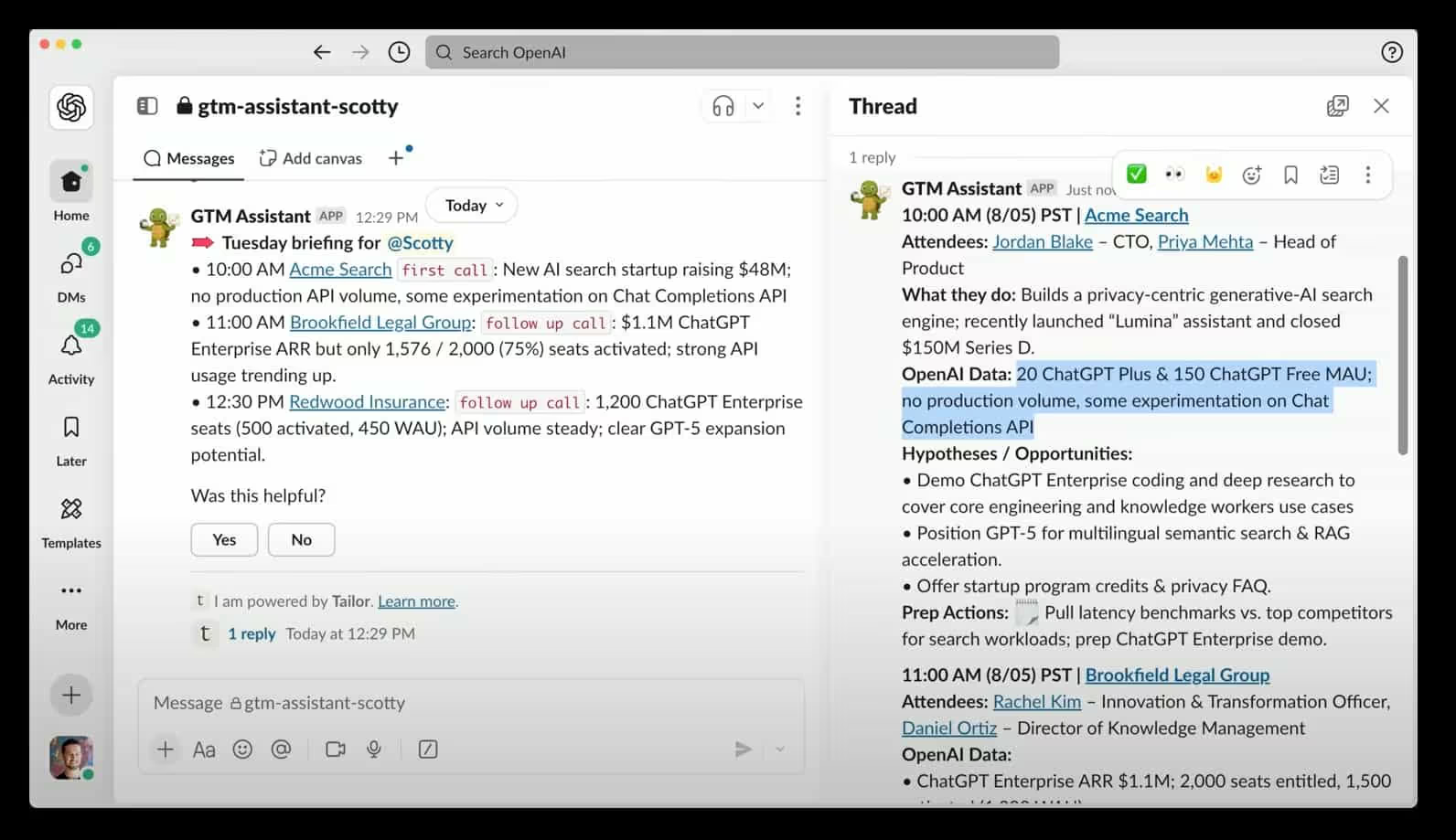

That is where the Go-to-Market Assistant began. Scotty, from the Go-to-Market Innovation Team, started by shadowing the company’s top performer, Sophie. She had a remarkable ability to research customers, design custom demos, and follow up with precision. Her workflow became the blueprint for a new AI system that could help every rep work the way Sophie did.

The team built the assistant around that model of excellence. Integrated into Slack and ChatGPT, it prepares daily briefings, compiles research, drafts follow-up notes, and even builds demo scripts using real customer data. Every interaction creates a feedback loop that improves the model.

Today, more than 400 salespeople use it weekly, exchanging an average of 20 messages each. On average, they save a full day of work. But the deeper change is cultural. Excellence is no longer a personal advantage. It has become something shared, something that scales.

- HR: Turning Information Chaos into Organizational Memory

OpenAI’s next challenge came from within. The company had grown across continents, and with that growth came complexity. Policies were changing, teams were forming and reforming, and new hires struggled to find the context they needed.

The team built Open House, an internal AI assistant for people and culture. It connects Workday data, internal wikis, and communication channels into one intelligent interface. Employees can ask natural questions like “How do I access the New York office?” or “Who can help with customer demos?” The assistant answers instantly, linking to the right documents, people, and Slack threads.

It does more than answer questions. It connects people. When one employee planned a customer trip, Open House not only surfaced travel guidelines but also introduced a colleague nearby who had run a similar project. What once took days of back-and-forth messages now happens in a single conversation.

Today, 75% of employees use Open House every week. It has turned the company’s scattered knowledge into a living system, one that strengthens both efficiency and belonging.

- Support: Building a System That Learns From Every Interaction

Customer support at OpenAI operates at a massive scale, with millions of users and tickets each year. When new products like ImageGen launched, ticket volume spiked by several orders of magnitude. Traditional support systems could not keep up.

The team approached the problem differently. They studied thousands of customer interactions to define gold-standard responses and escalation rules. These became the foundation for a self-improving AI support system.

When a customer submits a ticket, the AI classifies it, finds the right context, and crafts a response. If it is confident, it replies automatically. If not, it escalates to a human, whose resolution then feeds back into the system. Each interaction becomes a new lesson.
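The loop described above can be sketched in a few lines of Python. This is only an illustrative toy, not OpenAI's implementation: the keyword classifier, the `SupportLoop` class, the `CONFIDENCE_THRESHOLD` value, and the in-memory `knowledge` store are all assumptions made for the example. It shows the general shape of confidence-gated automation in which human resolutions feed back into the system.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple

# Assumed cutoff for replying automatically; the article gives no figure.
CONFIDENCE_THRESHOLD = 0.8


@dataclass
class Draft:
    category: str
    reply: str
    confidence: float


@dataclass
class SupportLoop:
    # Hypothetical stand-in for what the system has learned so far:
    # one approved answer per ticket category.
    knowledge: Dict[str, str] = field(default_factory=dict)

    def draft(self, ticket: str) -> Draft:
        """Toy classifier: keyword match, confident only if an answer is known."""
        category = "billing" if "invoice" in ticket.lower() else "other"
        known = self.knowledge.get(category)
        if known is not None:
            return Draft(category, known, confidence=0.9)
        return Draft(category, "", confidence=0.2)

    def handle(self, ticket: str, human: Callable[[str], str]) -> Tuple[str, str]:
        """Reply automatically when confident; otherwise escalate and learn."""
        d = self.draft(ticket)
        if d.confidence >= CONFIDENCE_THRESHOLD:
            return ("auto", d.reply)
        resolution = human(ticket)
        # The human resolution becomes the answer for future tickets
        # in the same category.
        self.knowledge[d.category] = resolution
        return ("escalated", resolution)


def human_agent(ticket: str) -> str:
    # Placeholder for a real support agent's reply.
    return "Invoices are under Settings > Billing."


loop = SupportLoop()
first = loop.handle("Where is my invoice?", human_agent)
second = loop.handle("I need last month's invoice", human_agent)
```

In this sketch the first billing ticket is escalated because nothing is known yet, and the second is answered automatically from the stored human resolution. A production system would replace the keyword match with a model call and would review human resolutions before reusing them.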

The results have been remarkable. 70% of tickets are now resolved autonomously, a 30% improvement, with 80% rated highly positive by quality reviewers. The architecture is already expanding to real-time voice support.

Support used to be a cost center. Now it is an intelligence loop, one that improves with every customer it serves.

From OpenAI to the World: Lessons from Clay and Decagon

After showing how AI had reshaped its own operations, OpenAI turned the spotlight outward. In a later AMA session, the founders of Clay and Decagon shared how they help enterprises navigate the same transformation.

Their lessons echoed OpenAI’s internal experience:

- Treat AI deployments like product launches. Start small, involve humans early, and expand gradually as systems prove themselves.

- Prove ROI with clear data. Whether it is faster response times or higher satisfaction, measurable outcomes build confidence and justify investment.

- Build guardrails that empower users. Decagon uses Agent Operating Procedures (AOPs), a framework that lets non-technical teams define their own rules and safeguards.

- Keep integrations flexible. Clay separates its integration layer from the core system so new models can be added without breaking workflows.

Both founders agreed: AI adoption is not about a single deployment; it is about a continuous rhythm of iteration, improvement, and trust-building. The companies that master that rhythm move faster and sustain the change.
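The "keep integrations flexible" lesson can be illustrated with a small registry pattern. This is a hypothetical sketch, not Clay's architecture: `register_model`, `complete`, `enrich_lead`, and the two fake providers are invented names. The point is that the core workflow depends only on one shared function signature, so adding a model does not require changing workflow code.

```python
from typing import Callable, Dict

# Every provider is wrapped to fit one shared signature: prompt in, text out.
ModelFn = Callable[[str], str]

_registry: Dict[str, ModelFn] = {}


def register_model(name: str, fn: ModelFn) -> None:
    """Integration layer: plug a provider in under a stable name."""
    _registry[name] = fn


def complete(prompt: str, model: str) -> str:
    """Single entry point the core system calls, whatever the provider."""
    if model not in _registry:
        raise ValueError(f"unknown model: {model}")
    return _registry[model](prompt)


def enrich_lead(company: str, model: str = "model-a") -> str:
    """Core workflow: knows nothing about individual providers."""
    return complete(f"Summarize {company} in one line.", model)


# Hypothetical stand-ins for real model clients.
register_model("model-a", lambda prompt: f"[a] {prompt}")
register_model("model-b", lambda prompt: f"[b] {prompt.upper()}")

default_result = enrich_lead("Acme")
swapped_result = enrich_lead("Acme", model="model-b")
```

Switching from `model-a` to `model-b` changes only an argument; `enrich_lead` itself stays untouched, which is the property the separation is meant to protect.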

The Bottom Line

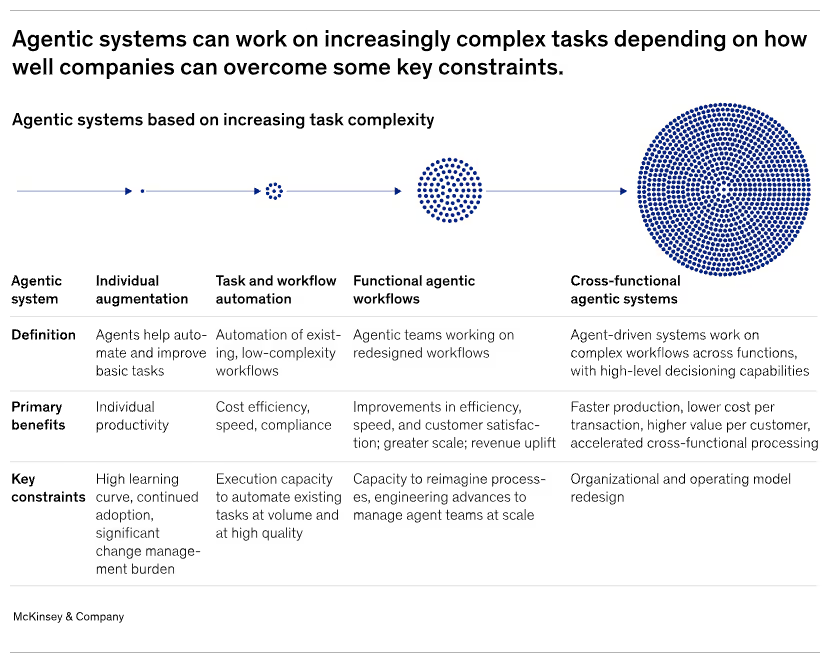

Across the keynote, the internal experiments, and the external insights, one truth stands out: AI adoption is not a technology project. It is an organizational redesign.

The question is shifting from “How do we automate tasks?” to “How do we amplify expertise?” When companies start with people and build from their best practices outward, AI becomes a multiplier of human talent, not a replacement for it.

Here is the practical path forward:

- Find your “Sophie.” Identify a top performer and map their workflow in detail.

- Embed AI inside familiar tools rather than creating a new system.

- Start small with clear metrics for success.

- Create continuous feedback loops so every interaction improves the model.

- Build flexible guardrails that combine human judgment with automation.

This is what OpenAI modeled at DevDay. The company did not just showcase tools; it demonstrated how organizations can evolve with them. When AI becomes part of how people learn from one another, share knowledge, and make better decisions, the line between human and machine collaboration disappears. For organizations ready to move from pilots to performance, Lead with AI partners with enterprises to align leadership, activate skills, and turn adoption into measurable results.
