Companies are celebrating AI usage while quietly paying a downstream rework tax. The fix isn’t “more AI,” it’s better system design.

Last week, a viral HBR article based on research by BetterUp and the Stanford Social Media Lab made the rounds in our executive community.

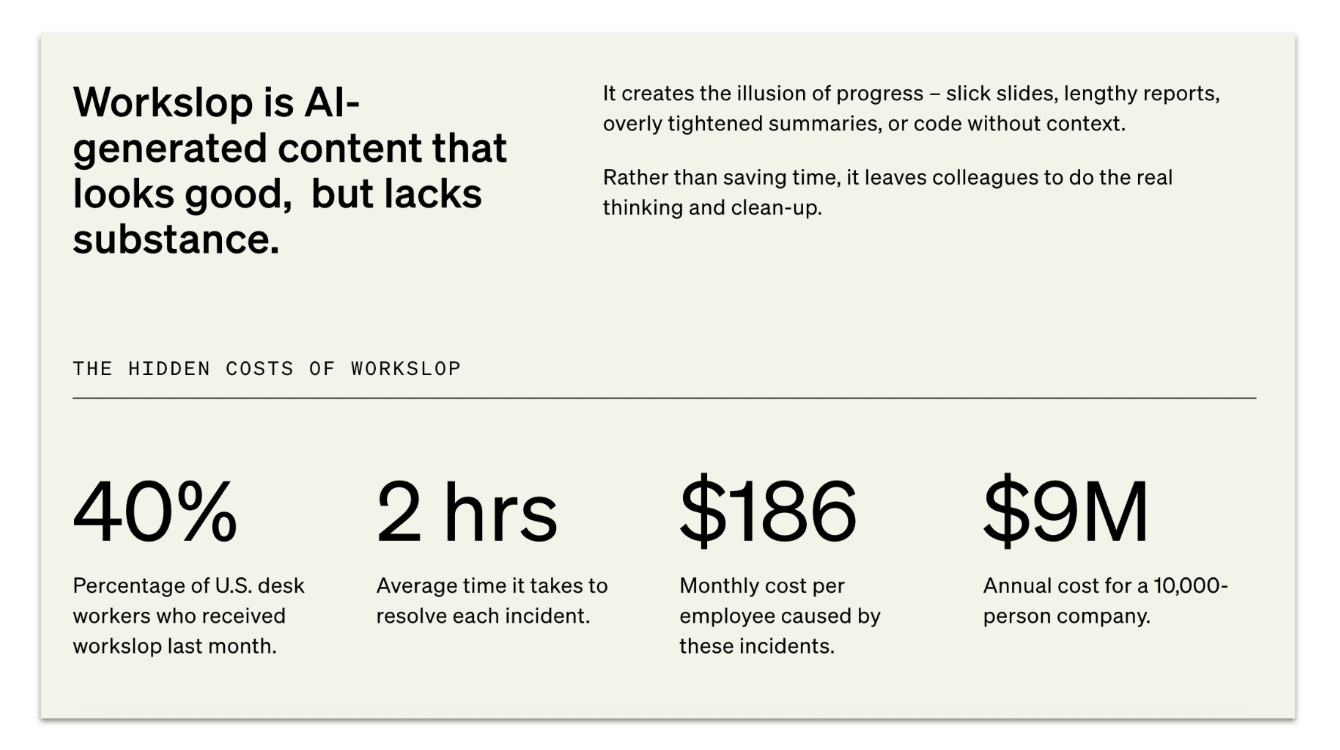

According to the research, businesses are being slowed down by workslop: AI-generated work content that looks polished but doesn't move the task forward, shifting the effort onto the receiver.

And the costs are real, say the researchers:

- 40% of U.S. desk workers received workslop last month.

- It takes about 2 hours to resolve each incident.

- That adds up to $186 per affected employee per month in hidden cost.

- For a 10,000-person company, that’s $8.9M per year in lost productivity.
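
The $8.9M figure only works out if the $186 monthly cost applies to the 40% of employees who receive workslop. Applied to every employee, the same math would give about $22.3M. Here is a quick back-of-the-envelope check in Python, a sketch using the rounded numbers above (the function name is ours, for illustration only):

```python
# Back-of-the-envelope reconstruction of the workslop cost estimate.
# Assumption: the $186/month cost applies only to employees who receive
# workslop (40% of the workforce), not to every employee.

def annual_workslop_cost(headcount: int, prevalence: float,
                         monthly_cost_per_recipient: float) -> float:
    """Return the estimated yearly productivity loss in dollars."""
    affected = headcount * prevalence  # employees receiving workslop
    return affected * monthly_cost_per_recipient * 12  # 12 months per year

cost = annual_workslop_cost(headcount=10_000, prevalence=0.40,
                            monthly_cost_per_recipient=186)
print(f"${cost:,.0f} per year")  # → $8,928,000 per year
```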

Vendors are pushing a narrative of ‘vibe working,’ as in Microsoft’s latest Copilot commercial, shared by Boot Camp graduate Sacha Connor.

But in practice, this “workslop” imposes a ‘rework tax’ that slows down the organization.

Beyond losing time, we're also losing trust. After receiving workslop, people rate the sender lower on key traits:

- Creativity: 54% saw the sender as less creative

- Trustworthiness: 42% saw the sender as less trustworthy

- Intelligence: 37% saw the sender as less intelligent

Capability and reliability show similar declines, and 32% are less willing to work with the sender again.

Now, let's acknowledge that while there may be truth to these numbers, this research is, first and foremost, content marketing for BetterUp.

But that's not to say that the phenomenon doesn't exist.

It's just something people were doing long before AI and will keep doing long after. As BCG partner Debbie Lovich wrote:

“GenAI isn’t the problem - it’s how we’re asking people to use it.”

And Brice Challamel, Moderna's Head of AI, noted:

"There is a special place in purgatory for people who burden their colleagues with useless emails and empty lingo documents. And I've seen those around for more than 3 decades now..."

Even the Microsoft commercial above has an eerily similar predecessor from 1990:

Workslop: the reality

Most of us don’t work to check boxes or hit shallow KPIs. We’re here to do meaningful work that creates a better future.

And AI can be a huge aid in increasing our "Impact Per Hour."

But AI usage doesn't equal value, and AI activity that amounts to “abdicated thinking” can erode collaboration and increase rework.

We therefore need to measure and manage the downstream burden of AI.

“AI-first” rhetoric without workflow-level decisions creates a strategy–execution gap. The recent viral MIT paper arguing that 95% of AI pilots fail highlighted this.

As in our workshop, the goal is for AI to move existing KPIs across departments and strategic initiatives.

To do this, we need both large-scale, company-wide projects and individual AI mastery (join us tomorrow to go beyond the surface of AI).

At Zapier, as CHRO Brandon Sammut told us in a Lead with AI PRO Masterclass, there are clearly defined levels of AI fluency, ranging from unacceptable to transformative.

In company-wide AI training, encourage experimentation with AI writing, distinguish between drafts and decision-ready work, and make clear that the sender owns quality at handoff.

Beyond catching hallucinations, senders should review AI outputs through the lens of the downstream cost of workslop.

Like many AI initiatives, this is 90% culture and 10% technology. You wouldn’t forward a Wikipedia or HBR article repackaged as your own, so don’t do it with AI-generated content.

This is how we avoid behaviors like the two examples Brian Elliott referenced in his newsletter on this topic:

- Managing up: A colleague who “very clearly puts any question into ChatGPT and pastes the response without reading it” is “loathed by everyone else on the team, but our boss loves him because he answers quickly — even though he’s often wrong and we have to clean it up.”

- Managing down: A CFO who takes marketing presentations, feeds campaign highlights into ChatGPT, and sends back “better ideas” — often lacking crucial context about target customers or market realities.

A Lesson to Include

For most of you who are at the leading edge of AI, hearing about workslop is a good reminder that not everyone is well-versed yet.

Share the article with your leaders and remind them to be mindful of team members who may be (inadvertently) shortcutting work with AI in ways that don’t benefit them or the company. (Without damaging the psychological safety needed to have a culture of experimentation!)

If you already have an AI training program, then here’s a short lesson to include:

1. Start with Tasks, Not Tools

- Know when to tap AI. Typically, this is when it accelerates idea generation, transformation (summarize/translate/rewrite), or validation (compare/checklists).

- Avoid AI as the source of record for new decisions or domains you’re unfamiliar with.

2. Label Drafts & Own the Handoff

- Every AI output is a draft until you, the creator, make it decision-ready. Never forward raw model output without edits.

- Red-team the output: check for hallucinations and read the content as the receiver would. Does it make sense? Does it raise further questions?

- Our cultural norm is that the sender owns the downstream effort. If the receiver needs to pay a ‘rework tax,’ the work isn’t done.

3. Lightweight Feedback Loop

- For non-routine outputs, track signals like the rework rate (how often work gets sent back), or ask for feedback on AI-assisted documents.
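
One lightweight way to track the rework rate is to log each non-routine handoff and whether it came back. Below is a minimal sketch, assuming a simple hypothetical record format. The `Handoff` fields, the `rework_rate` helper, and the sample entries are illustrative, not taken from the research:

```python
from dataclasses import dataclass


@dataclass
class Handoff:
    """One deliverable handed to a colleague (hypothetical record format)."""
    doc_id: str
    ai_assisted: bool
    sent_back: bool  # True if the receiver returned it for rework


def rework_rate(handoffs: list[Handoff]) -> float:
    """Share of AI-assisted handoffs that were sent back for rework."""
    ai_docs = [h for h in handoffs if h.ai_assisted]
    if not ai_docs:
        return 0.0  # nothing AI-assisted logged yet
    return sum(h.sent_back for h in ai_docs) / len(ai_docs)


log = [
    Handoff("q3-plan", ai_assisted=True, sent_back=False),
    Handoff("campaign-brief", ai_assisted=True, sent_back=True),
    Handoff("board-memo", ai_assisted=False, sent_back=True),
    Handoff("faq-update", ai_assisted=True, sent_back=False),
]
print(f"Rework rate: {rework_rate(log):.0%}")  # → Rework rate: 33%
```

Because the log also records non-AI work, the same data can give you a baseline: if AI-assisted documents come back more often than the rest, that may be a sign of workslop.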

Teaching this as a simple lesson builds good habits around when and how to use AI.

The Bottom Line: Impact Per Hour

No one needs more work.

Maintain a culture where delivered work, whether produced by a human or a human-AI team, is high quality, because it exists to make an impact.

Using AI should be a compliment, not an insult, but avoid the slippery slope of abdicating your thinking. Prompt well, iterate with AI, offload the parts of writing that AI does better, then review and send.

Move from AI as shortcut to AI as collaborator and reap the rewards, individually and as an organization.

See you next week,

- Daan