Delegation Engineering: Why Agentic AI Fails Without Managers

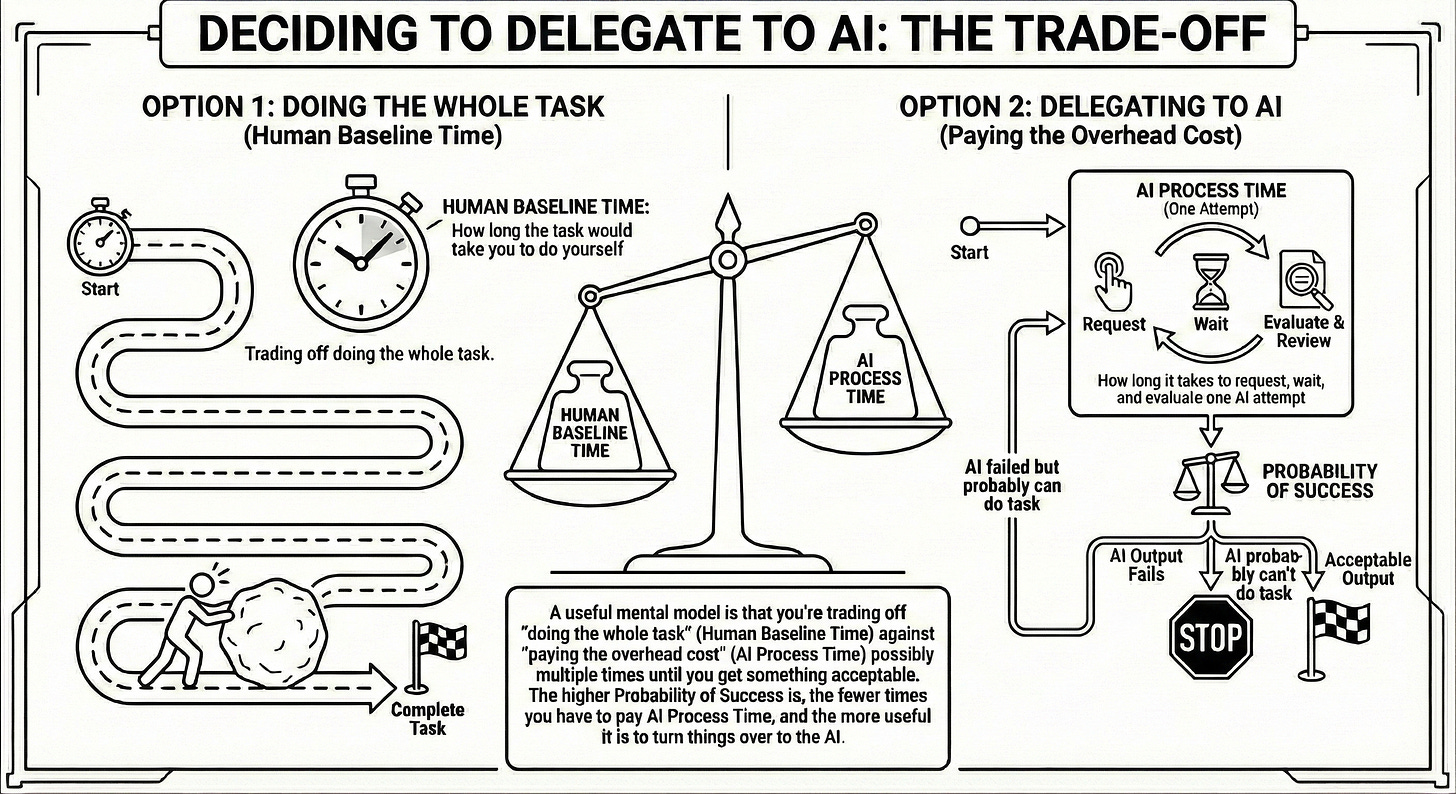

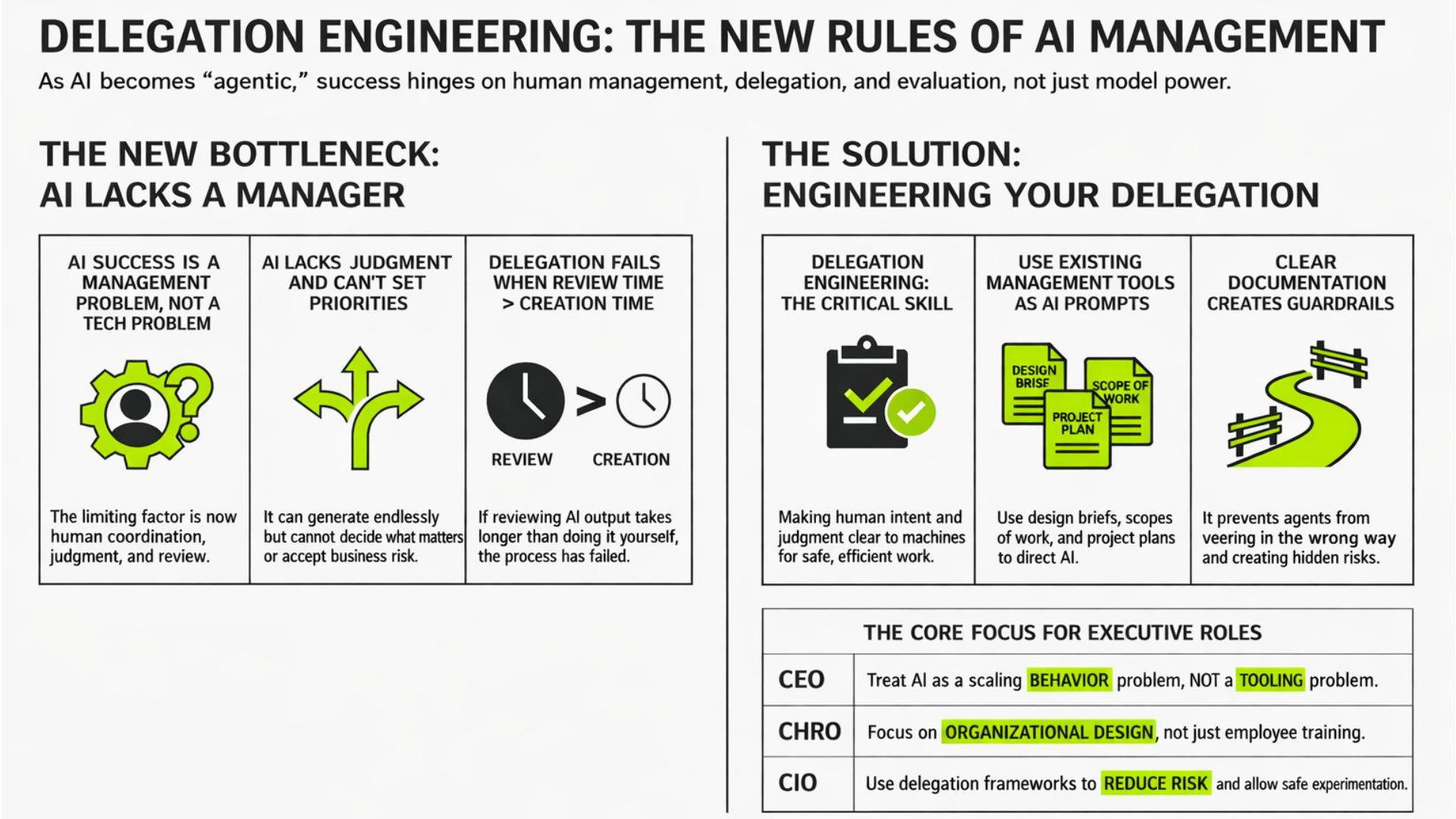

Ethan Mollick’s recent work makes one thing explicit: once AI becomes agentic, the limiting factor is no longer the model. It is how humans delegate, supervise, and evaluate work.

In other words, AI success has quietly become a management problem.

In a recent experiment at the University of Pennsylvania, Mollick asked executive MBA students to build startups from scratch in four days. They used Claude Code and general AI models for research, positioning, pitching, and financial modeling. Most had never written code.

The results were striking. Teams shipped working prototypes, solid market analysis, and believable business stories. Mollick estimates they got an order of magnitude further than similar students working over a full semester.

The bottleneck was not AI capability. It was coordination, judgment, and review.

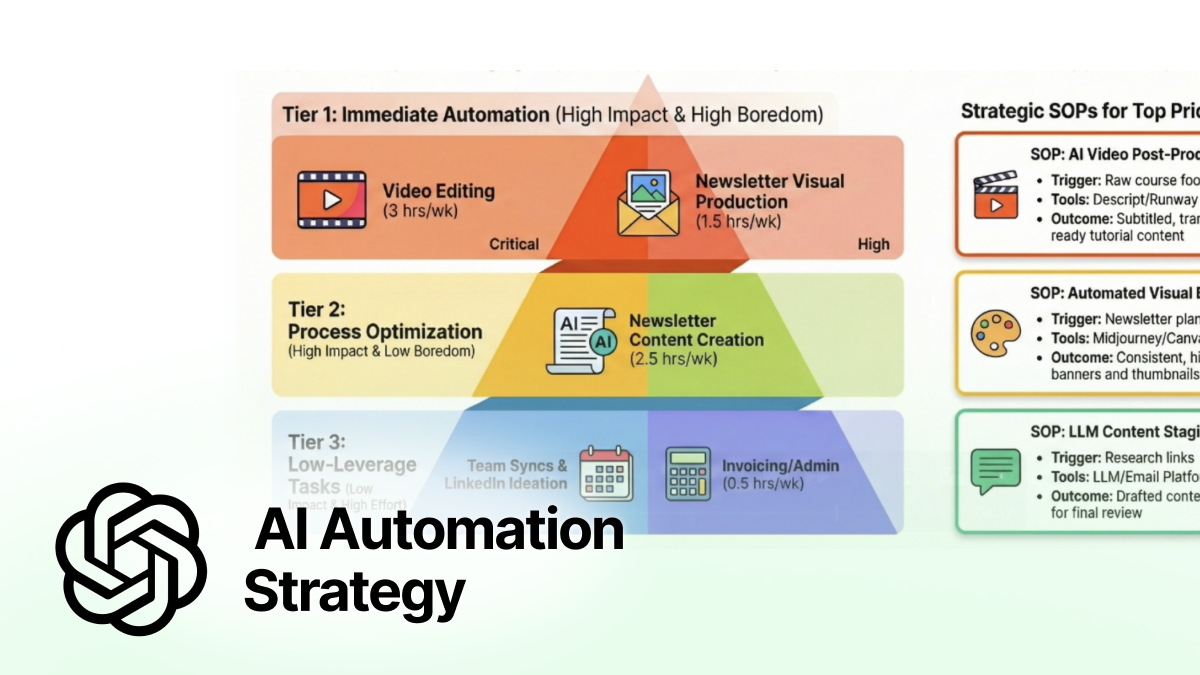

That lesson matters for leaders rolling out agents today. The value of AI no longer depends on how good the model is. It depends on how well work is delegated. The real competitive advantage is delegation engineering.

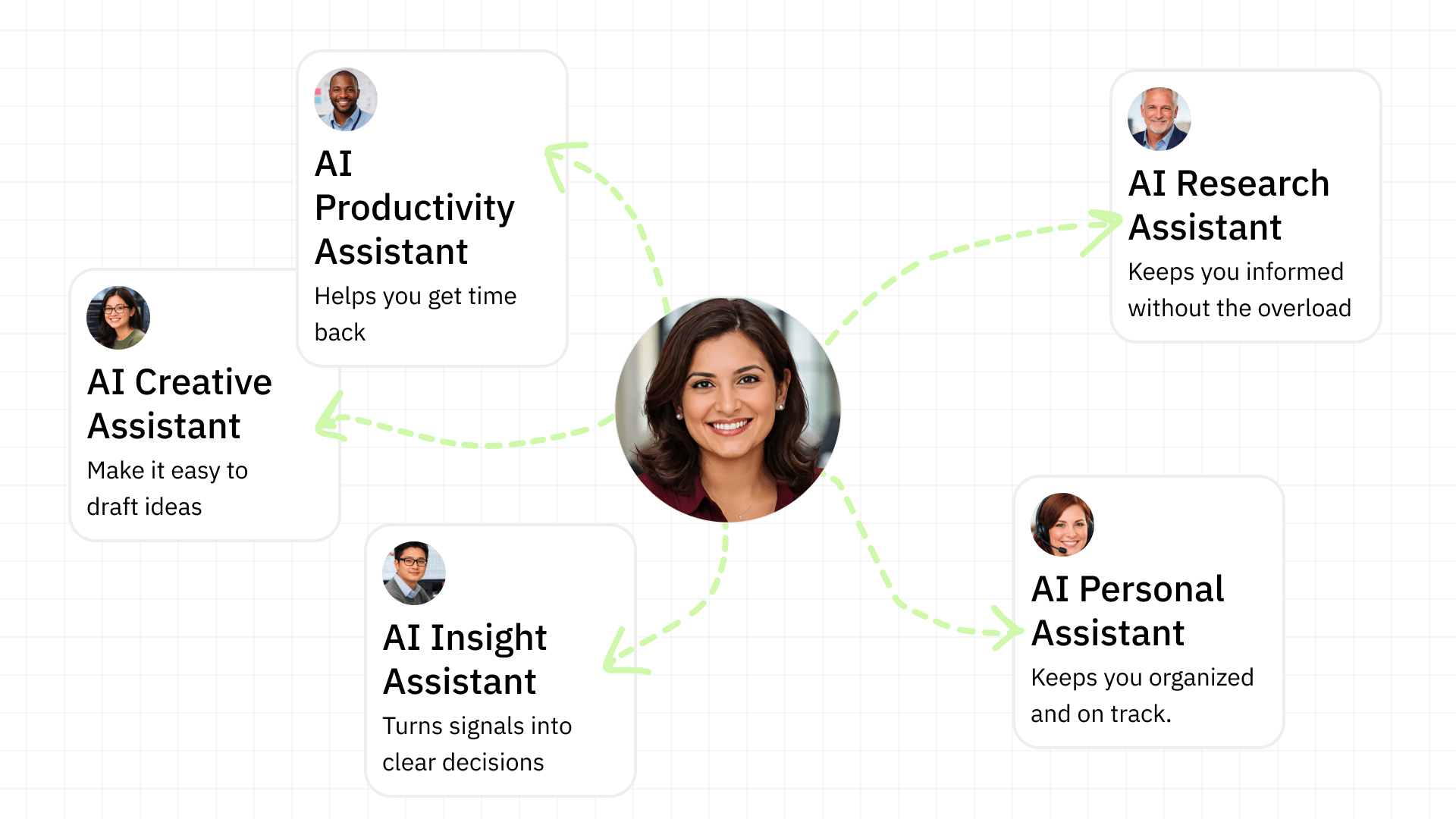

Delegation Engineering is the practice by which leaders make human judgment legible to machines, so that work can be delegated safely, reviewed quickly, and improved continuously.

Agentic Gains Come From Management, Not Speed

AI is already fast. That is no longer the advantage.

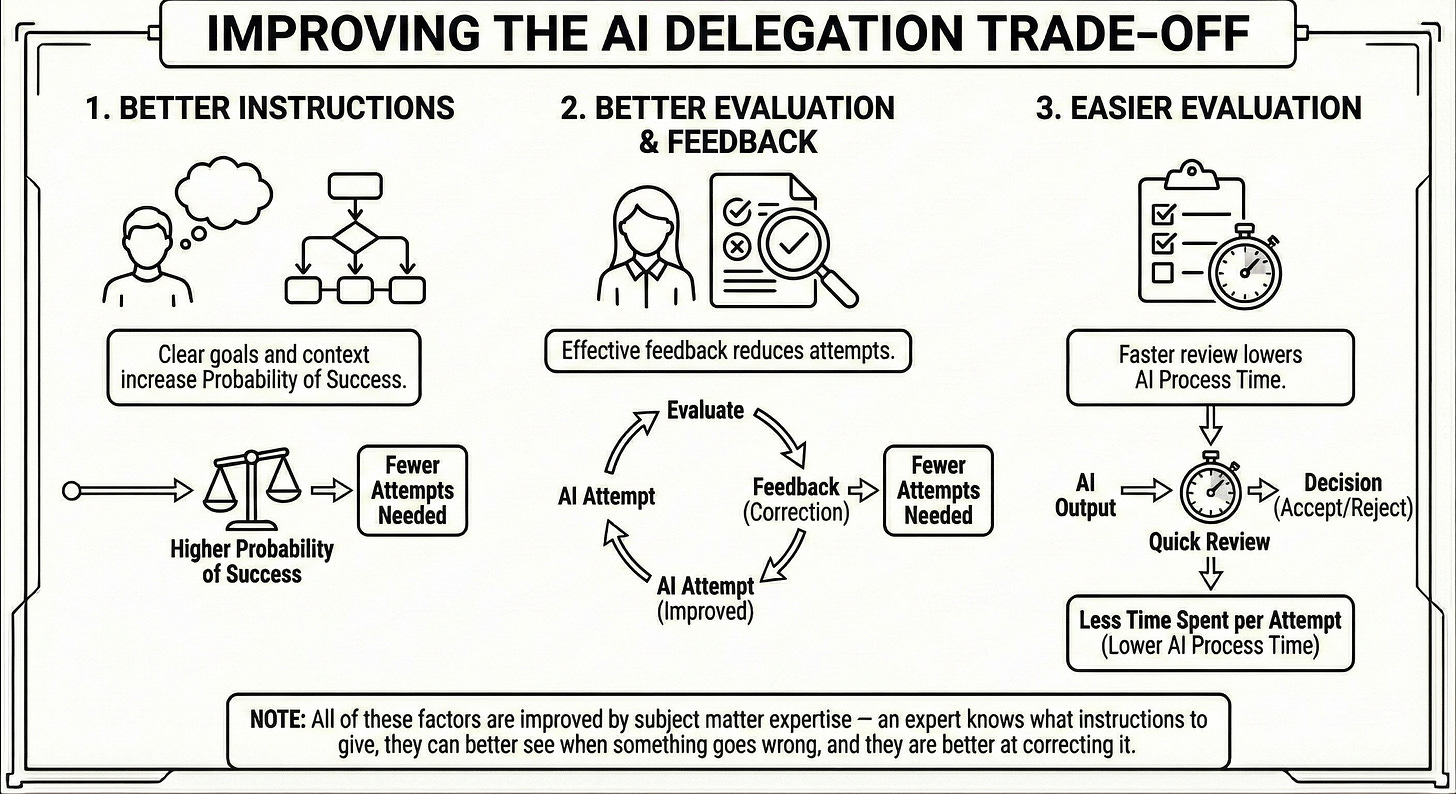

What limits results now is whether someone clearly defines what needs to be done, what “good” looks like, and when work is finished.
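One way to make those three definitions concrete is to write them down as a structured brief before handing work to an agent. The sketch below is illustrative only: the `DelegationBrief` class, its field names, and the example tasks are hypothetical and do not come from Mollick's experiment or from any particular agent framework.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DelegationBrief:
    """Hypothetical brief a manager fills in before delegating to an agent."""

    task: str                       # what needs to be done
    acceptance_criteria: List[str]  # what "good" looks like
    done_when: str                  # when the work counts as finished

    def missing_fields(self) -> List[str]:
        """Return the names of the parts of the brief that are still empty."""
        missing = []
        if not self.task.strip():
            missing.append("task")
        if not self.acceptance_criteria:
            missing.append("acceptance_criteria")
        if not self.done_when.strip():
            missing.append("done_when")
        return missing

    def is_ready_to_delegate(self) -> bool:
        """A brief is ready only when all three parts are filled in."""
        return not self.missing_fields()


# A brief that spells out the task, the quality bar, and the finish line.
clear_brief = DelegationBrief(
    task="Draft a competitor analysis for the pitch deck",
    acceptance_criteria=[
        "Covers at least five competitors",
        "Every claim cites a source",
    ],
    done_when="A reviewer has checked the sources and approved the summary",
)

# A brief that names a task but says nothing about quality or completion.
vague_brief = DelegationBrief(task="Do some research", acceptance_criteria=[], done_when="")

print(clear_brief.is_ready_to_delegate())
print(vague_brief.missing_fields())
```

The check is deliberately simple. It cannot judge whether the criteria are good ones, only whether someone has taken the time to state them, which is the management step the agent cannot do for itself.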

In Mollick’s experiment, teams ran multiple agents in parallel. Research agents. Coding agents. Writing agents. What made this work was not the tools. It was that someone decided priorities, resolved conflicts, and moved things forward without constant debate.

This is the shift leaders often miss. Agentic AI does not reduce the need for management. It raises the bar for it.

AI can generate endlessly. It cannot decide what matters. That decision still belongs to people.