How AI is Breaking Middle Management

Last week, I shared the three future-AI scenarios that terrify me, and a fourth that I deeply believe we need to fight for: the Co-Pilot economy.

In that fight, let's not forget about middle managers.

Because new data shows that companies like Amazon, McDonald’s, Uber, and Walmart have used algorithms to replace the work that trained managers to lead.

Scheduling, performance tradeoffs, capacity decisions, and conflict resolution were all automated. These were the small, imperfect tasks where judgment was built.

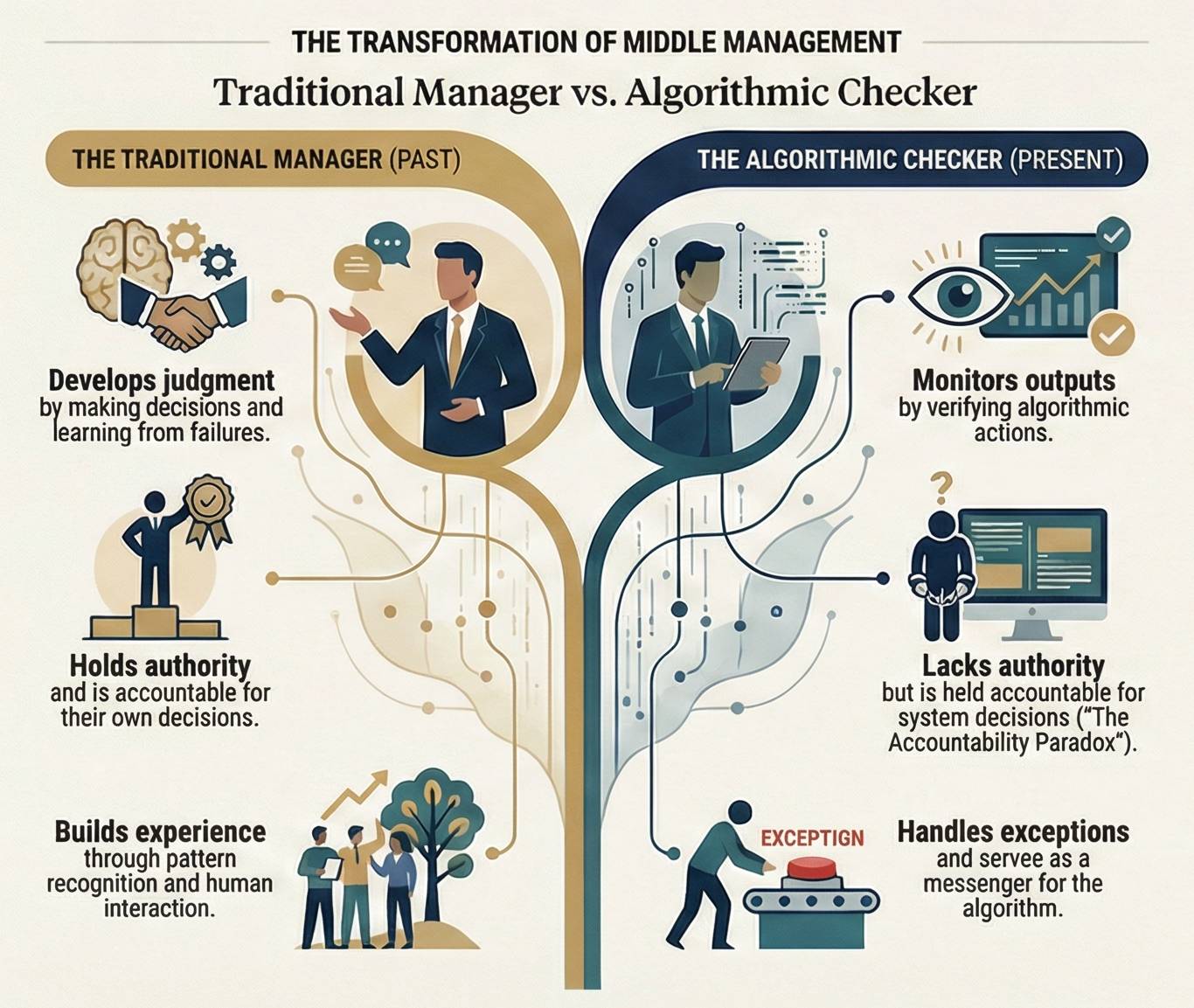

In the process, algorithmic management created checkers instead of leaders, a judgment gap crisis in middle management, and a long-term erosion of leadership.

As with the future AI scenarios, this early data shows a direction, not an inevitability.

Six months ago, Simon Sinek warned that AI cannot struggle for you. It cannot experience uncertainty, failure, or recovery, the very conditions through which humans learn judgment.

Leadership is forged in friction and repair, and when organizations automate away those moments, they are not accelerating growth; they are taking a shortcut around development. They save time, but erode the experiences that create capable leaders.

For the last decade, companies haven’t replaced managers with AI. They replaced the work that trained managers to lead.

Those decisions did not disappear by accident. They were automated in the name of efficiency.

A new study from Northeastern University’s Dr. Ravi Kalluri puts a name to the consequence: The Judgment Gap Crisis.

While companies automate decisions to boost efficiency, they are simultaneously removing the repeated judgment practice that produces future executives. The result is a growing class of managers who can monitor AI outputs, escalate exceptions, and explain results, but struggle to lead through ambiguity, complexity, or uncertainty.

Efficiency Gains Quietly Removed The Judgment Reps Managers Depended On

The numbers look impressive.

- McDonald’s rolled out its Orquest system and cut scheduling time from four hours to 30 minutes, an 87% reduction.

- Walmart deployed 45 AI agents to handle tasks that once consumed roughly 40% of a general manager’s time.

- Amazon’s Time Off Task system automatically terminates workers after two hours of inactivity with zero human override.

These are framed as productivity wins. And they are.

But what quietly disappeared with that efficiency was not busywork. It was repetition.

Those scheduling hours forced managers to absorb constraints, negotiate tradeoffs, anticipate friction, and recover when things went wrong. They were the thousands of low-stakes judgment reps where pattern recognition, situational awareness, and leadership intuition formed.

When algorithms absorb those decisions, managers do not fail immediately. They simply stop practicing judgment.

Over time, leadership becomes reactive. Pattern recognition dulls. Judgment confidence erodes.

The system improves. The human plateaus.

The Accountability Paradox Turns Managers Into Compliance Monitors

As automation expands, authority shifts faster than accountability.

This is the Accountability Paradox at the center of Kalluri’s study. Managers remain responsible for outcomes they no longer meaningfully shape.

- At Amazon, warehouse managers cannot adjust the algorithmically set quota of 420 boxes per hour, even after California legislators demonstrated they could not sustain that pace for more than three minutes.

- At Walmart, AI-driven scheduling reduced employee hours by roughly 20%, yet managers tasked with implementing those cuts cannot explain the logic or negotiate exceptions.

They carry the human consequences of decisions made by systems they cannot influence.

This breaks the learning loop that leadership depends on. Judgment develops when responsibility and authority are linked. When managers cannot trace outcomes back to their own decisions, feedback collapses. Learning stops.

Middle managers become compliance monitors, not decision makers.

This is how Player-Coaches get stuck in the Frozen Middle, watching burnout rise while lacking the authority to intervene.

Leadership Development Is Thinning Long Before Anyone Notices

The real cost of algorithmic management does not show up in quarterly metrics. It shows up later, when organizations look for leaders who can navigate ambiguity and discover they trained very few.

Kalluri’s study warns that in 10 to 15 years, companies will urgently need executives who can make judgment calls with incomplete information under novel conditions. At the same time, they are systematically eliminating the developmental experiences that create that capability.

AI did not remove managers. It removed the practice that turns managers into executives.

Organizations celebrating today’s efficiency gains are not measuring what they are losing: accumulated judgment, contextual adaptation, recovered mistakes, and confidence earned through real decisions.

That loss compounds quietly until complexity arrives and leadership depth is no longer there.

The Bottom Line: What Executives Must Do Now

Preventing the Judgment Gap does not require slowing AI adoption. It requires designing leadership on purpose. Here are the five moves Lead With AI suggests to protect middle management capability while still capturing AI’s benefits:

- Design for judgment, not just efficiency: When automating workflows, identify which decisions disappear and whether they once trained managers to think, adapt, and lead. Efficiency that removes judgment weakens the leadership pipeline.

- Keep authority aligned with accountability: Managers must retain discretion over outcomes they are responsible for. AI should inform decisions, not sever the link between responsibility and influence.

- Measure leadership capacity, not only output: Track how often managers make tradeoffs, handle exceptions, and explain reasoning. Fewer decisions and more escalations signal quiet capability loss.

- Create deliberate exposure to ambiguity: Rotate high-potential managers into cross-functional, uncertain decisions where AI assists but does not decide. That friction is executive training, not inefficiency.

- Reward agency over compliance: Publicly reinforce moments where managers challenge AI outputs, surface risks, or slow decisions to protect people and outcomes. Culture follows what leaders praise.

The future executive bench will not be built by faster systems alone. It will be built by leaders who learned when to use AI and when to think for themselves.

Your job is to make sure the next generation still gets that chance.
