OpenAI: AI at Work Is Expanding Human Capability

For the past two years, enterprise AI has largely been framed as a productivity story. How much time can be saved. How many tasks can be automated. How quickly costs can be reduced.

The new State of Enterprise AI report from OpenAI shows why that framing is now incomplete.

Based on aggregated enterprise usage data and a survey of 9,000 workers, the report captures what is actually happening inside organizations as AI moves from experimentation into daily work. Weekly enterprise usage has grown 8× year over year. Reasoning token consumption per organization is up 320×. Custom GPTs and workflow projects now account for roughly 1 in 5 enterprise messages.

OpenAI frames this moment as the shift from experimentation to scaled use cases. What the data ultimately reveals is something more fundamental: a rapid expansion of what people inside organizations are capable of doing.

The most important signal in the data is not speed. It is scope.

According to OpenAI’s deployment and adoption teams, 75% of enterprise workers say AI enables them to complete tasks they previously could not perform. These include activities that once sat firmly outside many roles, such as programming support, code review, spreadsheet automation, technical troubleshooting, and building internal tools.

This is not incremental productivity. It is a shift in what roles are capable of doing.

Four forces define this capability-first phase of enterprise AI.

1. AI is Breaking Role Boundaries Across the Enterprise

The OpenAI data shows that AI is no longer confined to technical specialists.

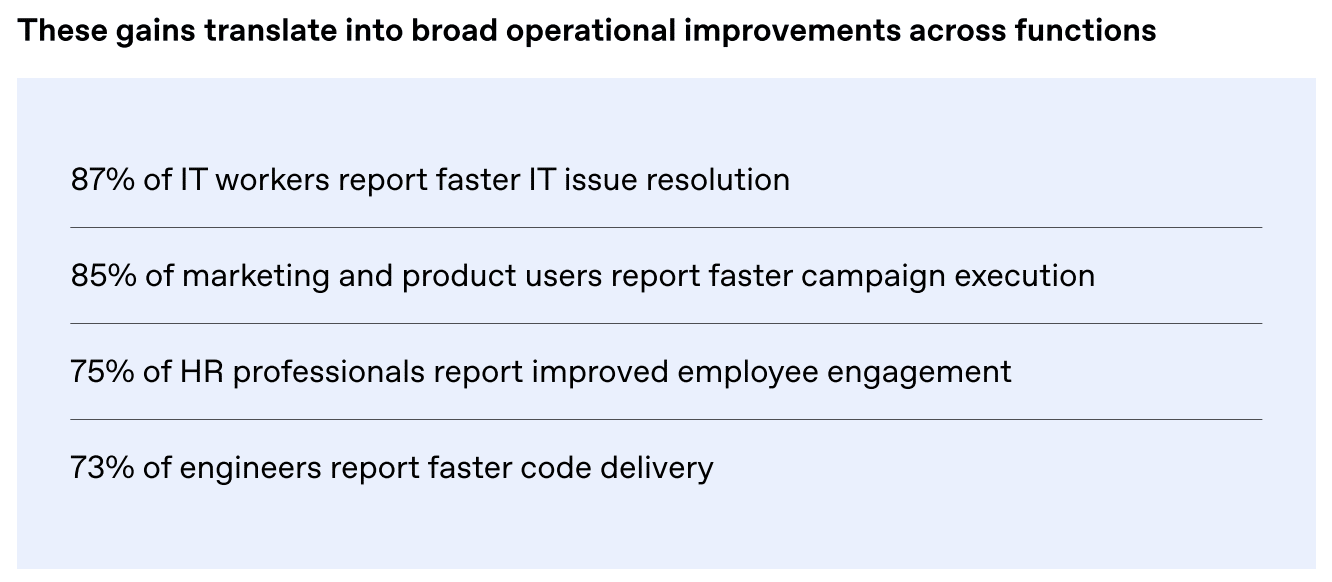

Coding-related messages from non-technical roles are up 36% in just six months. HR teams report improved employee engagement. Marketing and product teams report faster campaign execution. IT teams resolve issues more quickly. Engineers ship code faster.

This breadth matters. It shows that AI is expanding horizontally across the organization, not just vertically within a few expert teams.

When people can perform tasks they previously depended on other functions for, work moves closer to the source of judgment. Bottlenecks ease. Decision cycles shorten. Organizations become less dependent on handoffs and queues.

Capability expansion changes the shape of work itself. Capacity gains follow, but only because roles have first stretched to include new skills.

This is why focusing only on time savings misses the point. If roles do not expand, organizations simply do the same work faster. If roles expand, organizations change how work gets done.

2. Depth of Capability, not Frequency of Use, Determines Impact

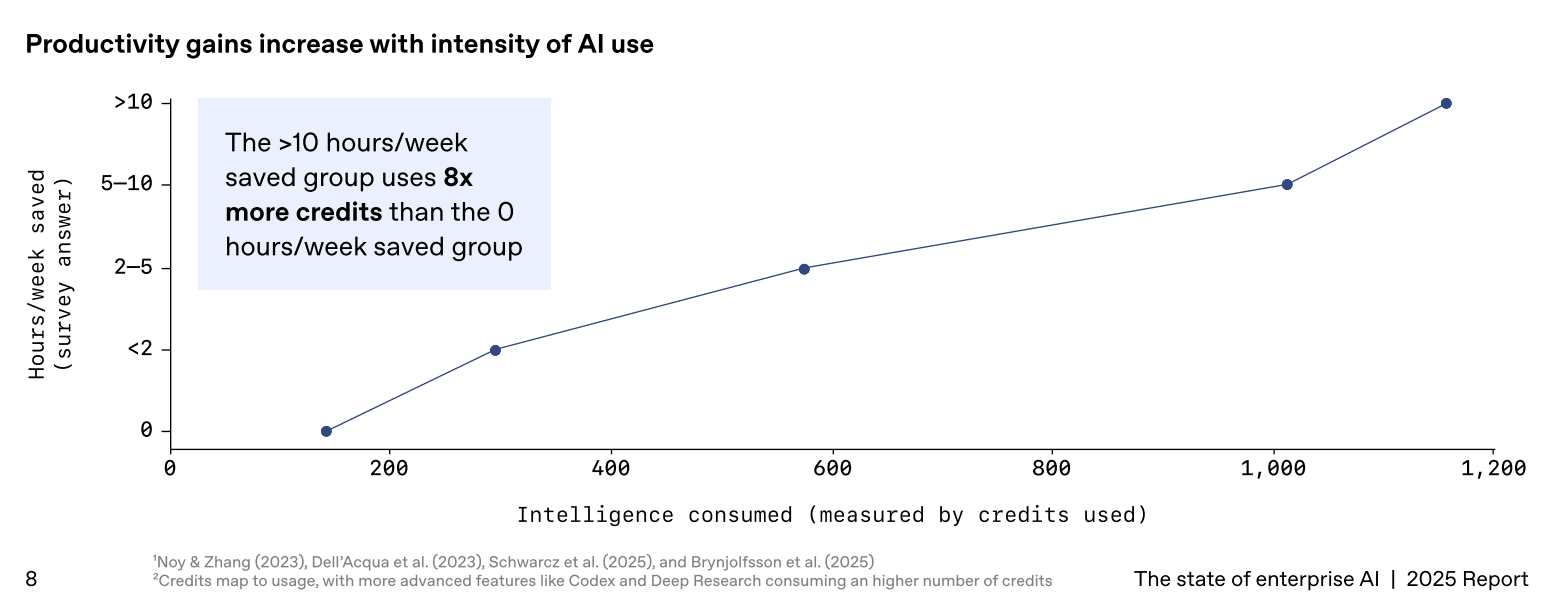

The report also makes clear that not all AI use is equal.

Workers who report saving more than 10 hours per week are not just using AI more often. They are using it more deeply. This group uses advanced AI capabilities 8× more than those who report no time savings. They engage across a wider range of tasks, from analysis and coding to workflow automation and synthesis.

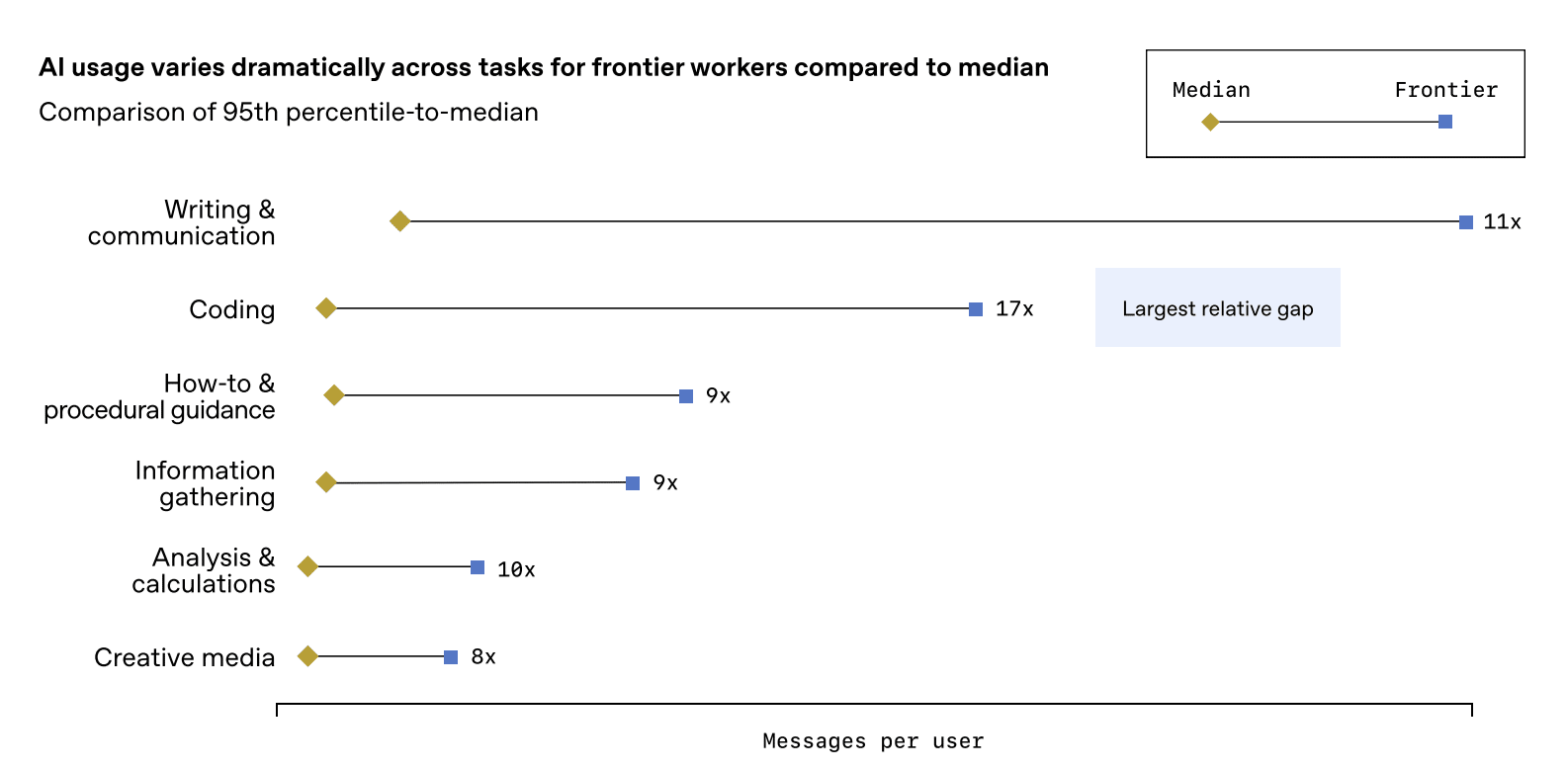

The same pattern appears at the firm level. Frontier organizations generate roughly 2× more AI messages per seat than the median enterprise and 7× more messages to advanced GPT workflows. These firms are not experimenting in pockets. They are standardizing capability.

Custom GPTs and Projects play a central role here. By embedding instructions, knowledge, and actions into shared tools, organizations make expanded capabilities repeatable and transferable. What one team learns does not stay local. It becomes infrastructure.

Yet many organizations are still leaving capability on the table. A meaningful share of active enterprise users have never used data analysis or reasoning features at all. This is not a technology constraint. It is a learning and enablement gap.

As behavioral scientist Stefano Puntoni has noted, productivity gains are real, but they are only the starting point. The deeper shift lies in how skills, roles, and expectations evolve once AI becomes part of everyday work.

3. Leadership is Shifting from Efficiency to Enablement

As AI absorbs predictable and repeatable tasks, the nature of leadership changes.

Managers spend less time coordinating execution and more time shaping it. Judgment, sensemaking, workflow design, and coaching move to the foreground.

OpenAI notes that the primary constraint for organizations is no longer model performance or tooling, but organizational readiness. In practice, readiness now means capability: skills, confidence, and the ability to redesign work at scale.

The most advanced organizations treat AI enablement as infrastructure. They invest in training, governance, and change management not to promote adoption, but to expand capability safely and consistently.

Leaders do not need to be technologists. But they do need AI fluency and workflow literacy. Leaders who use AI in their own work model curiosity, reduce fear, and help teams move from assistance to autonomy.

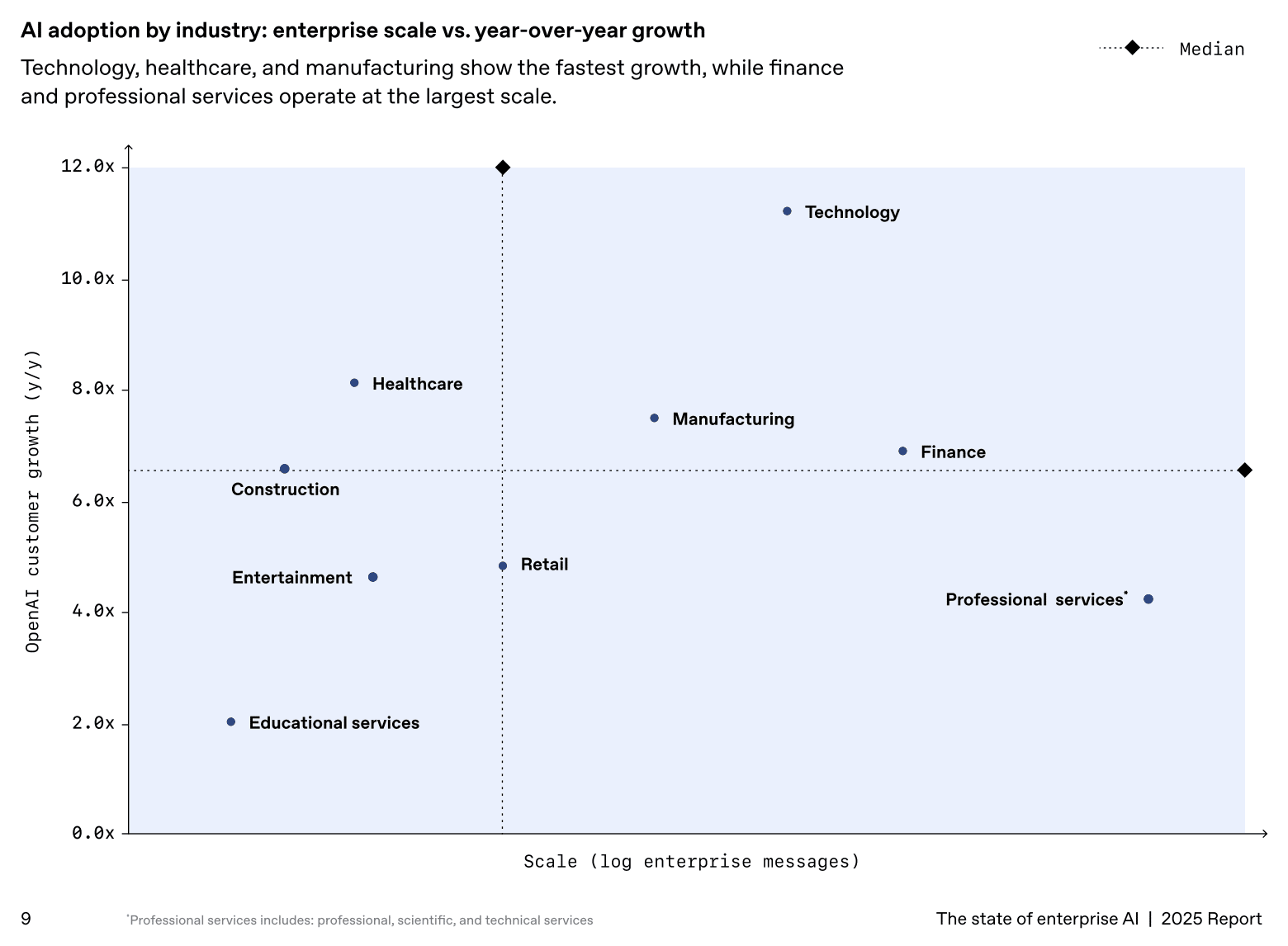

This shift is global. International enterprise AI usage has grown more than 70% in six months. Australia, Brazil, the Netherlands, and France are among the fastest-growing markets. Healthcare and manufacturing are now among the fastest-adopting sectors.

4. The Gap Between AI Leaders and Laggards is Widening

Leading firms are not just using AI more frequently. They are integrating it more deeply and standardizing workflows around it, treating AI as a core organizational capability rather than simply a productivity tool.

This depth of integration correlates with stronger financial outcomes.

BCG research shows that firms identified as AI leaders achieved significant financial outperformance over three years, including 1.7× revenue growth, 3.6× total shareholder return, and 1.6× EBIT margin.

And as I've mentioned countless times, this isn't a technology story: the primary constraint to scaling AI isn't the models or tools, but organizational readiness.

The Bottom Line: Build Capability First, Capacity Follows

Across organizations making real progress, the same behaviors appear.

- Design for role expansion: Start by defining what new tasks each role should be capable of performing with AI.

- Embed capability into workflows: Use shared assistants and tools so skills scale beyond individual power users.

- Make training core infrastructure: Role-based, in-workflow learning turns potential capability into practiced capability.

- Measure depth, not activity: Track task diversity and advanced feature use, not just logins or prompts.

- Lead by using: Capability spreads fastest when leaders model it in their own work.
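
The "measure depth, not activity" behavior above can be made concrete with a small sketch. It assumes a hypothetical usage log of (user, task category, advanced-feature flag) records. The field names, categories, and sample users are illustrative assumptions, not taken from the OpenAI report or any real product export.

```python
from collections import defaultdict

# Hypothetical usage log: (user, task_category, used_advanced_feature).
# All names and values here are made up for illustration.
events = [
    ("ana", "writing", False),
    ("ana", "writing", False),
    ("ana", "writing", False),
    ("ben", "analysis", True),
    ("ben", "coding", True),
    ("ben", "writing", False),
]


def depth_metrics(events):
    """Return per-user activity, task diversity, and advanced-feature share."""
    categories = defaultdict(set)   # distinct task categories per user
    total = defaultdict(int)        # raw message count per user (activity)
    advanced = defaultdict(int)     # messages that used an advanced feature
    for user, category, is_advanced in events:
        categories[user].add(category)
        total[user] += 1
        advanced[user] += int(is_advanced)
    return {
        user: {
            "messages": total[user],
            "task_diversity": len(categories[user]),
            "advanced_share": advanced[user] / total[user],
        }
        for user in total
    }


metrics = depth_metrics(events)
for user, m in metrics.items():
    print(user, m)
```

In this toy data both users send the same number of messages, so an activity count cannot tell them apart. Task diversity and advanced-feature share are what separate the two, which is the distinction the list above is pointing at.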

Turning these behaviors into practice is exactly the problem Lead with AI’s Enterprise AI Enablement program is designed to solve.

We help organizations move from isolated AI usage to broad capability expansion. Employees learn how to use AI to perform new kinds of work through mobile-first, in-workflow learning, aligned to their actual roles. Sales, HR, Operations, Finance, and Marketing follow role-specific tracks that focus on the tasks they can now own with AI. Capacity gains matter, but they follow capability expansion. Organizations only move faster after roles learn to do more.