How HubSpot Made AI Adoption Boring

Most AI transformations fail for a simple reason. They ask people to do extra work.

More training.

More experimentation.

More side projects layered onto already full calendars.

At HubSpot, AI adoption scaled because leadership made one deliberate choice that ran counter to the hype.

They made AI boring by design.

Not boring as in unimportant.

Boring as in expected, routine, and part of the job.

Instead of treating AI as something special, HubSpot embedded it into the places where work already happened: onboarding, performance conversations, customer workflows, and engineering practices. Over time, AI stopped feeling like an initiative and started feeling like routine. Once that happened, resistance faded.

What stood out to me is that adoption did not begin with transformation or moving people up fluency levels. It began when leaders made one part of the job boring by embedding AI into existing routines until usage became automatic.

That reframes the core message. Instead of chasing what’s new in AI, the more useful move is to pick one workflow, apply AI inside it, and iterate through test-and-learn cycles until it stops feeling novel and starts feeling like process.

That lesson comes from a recent Managing the Future of Work conversation with HubSpot’s Helen Russell and Jon Dick.

What HubSpot Did Differently

1. AI fluency became a baseline expectation

To keep employees from feeling “I’m behind,” HubSpot made AI use part of doing the job without requiring uniform expertise.

Leadership modeled their own AI usage in plain language. AI showed up directly in people processes: onboarding with an AI buddy, interview note-taking, feedback synthesis, and coaching conversations. Participation became normal, not performative.

This mattered because many leaders quietly worry about exposing uncertainty. HubSpot removed that pressure by making visible participation, not expertise, the expectation.

When expectations are clear, learning becomes public rather than defensive.

It shows in the data:

- 98% of employees use AI on the job

- 85% are weekly active users

- 93% report a sense of urgency within their teams to adopt AI

2. Tools reduced effort before expectations increased

To address inconsistent usage and tool overload, HubSpot focused on relief first: making work easier before asking for more.

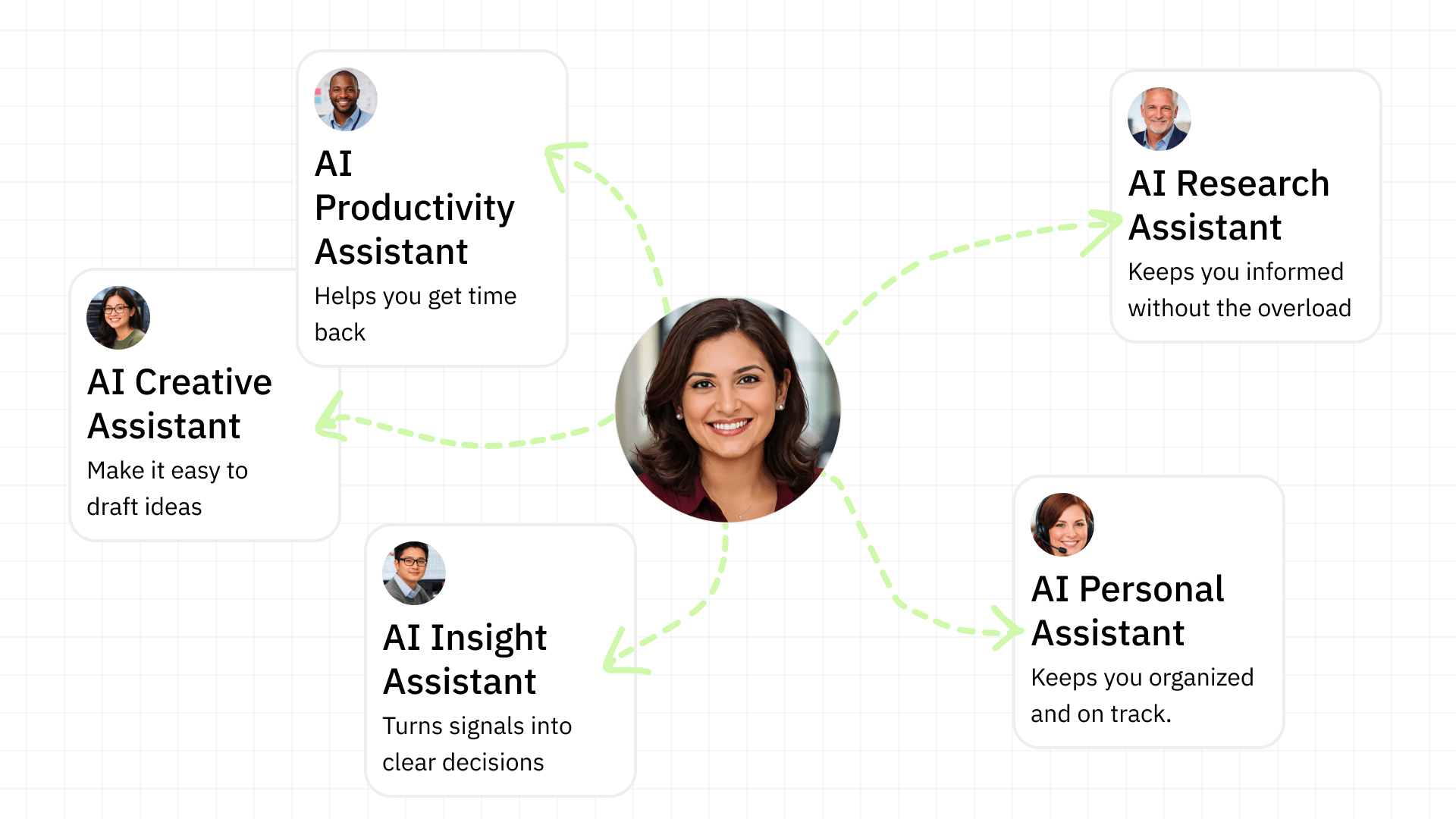

Customer Success teams used internal AI assistants for meeting prep and follow-ups. Engineers used AI inside standard development workflows. HR reduced friction in hiring, onboarding, and feedback.

Tool choices were simplified. Time was saved.

Only after teams consistently felt relief did leadership raise expectations around fluency and consistency. By then, AI already felt useful and familiar.

Engineering productivity increased 46%, with no increase in incident rates.

3. Norms and trust did the heavy lifting

To escape the pilot trap and ease trust anxiety, leadership framed AI as support for human work, not an efficiency mandate.

Work was examined at the task level to identify where AI could extend output. Peer examples spread usage through short demos and shared workflows. Skepticism was discussed openly. Guardrails around data, quality, and privacy stayed visible.

AI became socially safe and operationally trusted.

What “Making AI Boring” Looked Like in Engineering

HubSpot’s engineering teams showed what boring looks like in practice.

They began in mid-2023 with cautious pilots using GitHub Copilot, supported by founder-level sponsorship. Entire teams participated so learning happened collectively. Enablement came early through shared channels, training, and clear guardrails.

Impact was measured using metrics leaders already trusted: velocity, cycle time, and incident rates. Early gains, not hype, justified continued investment and wider rollout.

As tools improved and experience grew, gains compounded. More than 90% of engineers now use AI weekly. 95% of code changes include AI assistance. Productivity increased 46%, with no correlation to higher production incidents.

Once quality concerns eased, access became the default. Only after adoption crossed 90% did AI fluency become a hiring expectation.

By then, AI no longer felt new.

It felt expected.

That was the goal.

The Bottom Line: Choose the Level You Act On

Here is what HubSpot actually put in place at each level. You do not need to copy everything. Borrow what fits your context.

Level 1: Foundational

- Embedded AI into onboarding through an AI buddy

- Used AI for interview notes, feedback, and admin prep

- Set clear data and privacy guardrails

Level 2: Functional

- Rolled out internal AI assistants for Customer Success

- Integrated AI into standard engineering workflows

- Reduced tool sprawl by setting defaults

- Embedded AI into HR workflows

Level 3: Advanced

- Broke work into tasks to identify default AI use

- Formed AI pods combining functional experts and engineers

- Built reusable assistants and shared workflows

- Normalized peer sharing through Slack and demos

Level 4: Transformative

- Treated AI as infrastructure

- Standardized tools, governance, and metrics

- Measured impact via productivity and outcomes

- Made AI fluency a hiring expectation once adoption was proven

The lesson is simple but uncomfortable:

AI adoption does not start with transformation.

It starts when one part of the job becomes boring enough to repeat.

And once that happens, everything else follows.