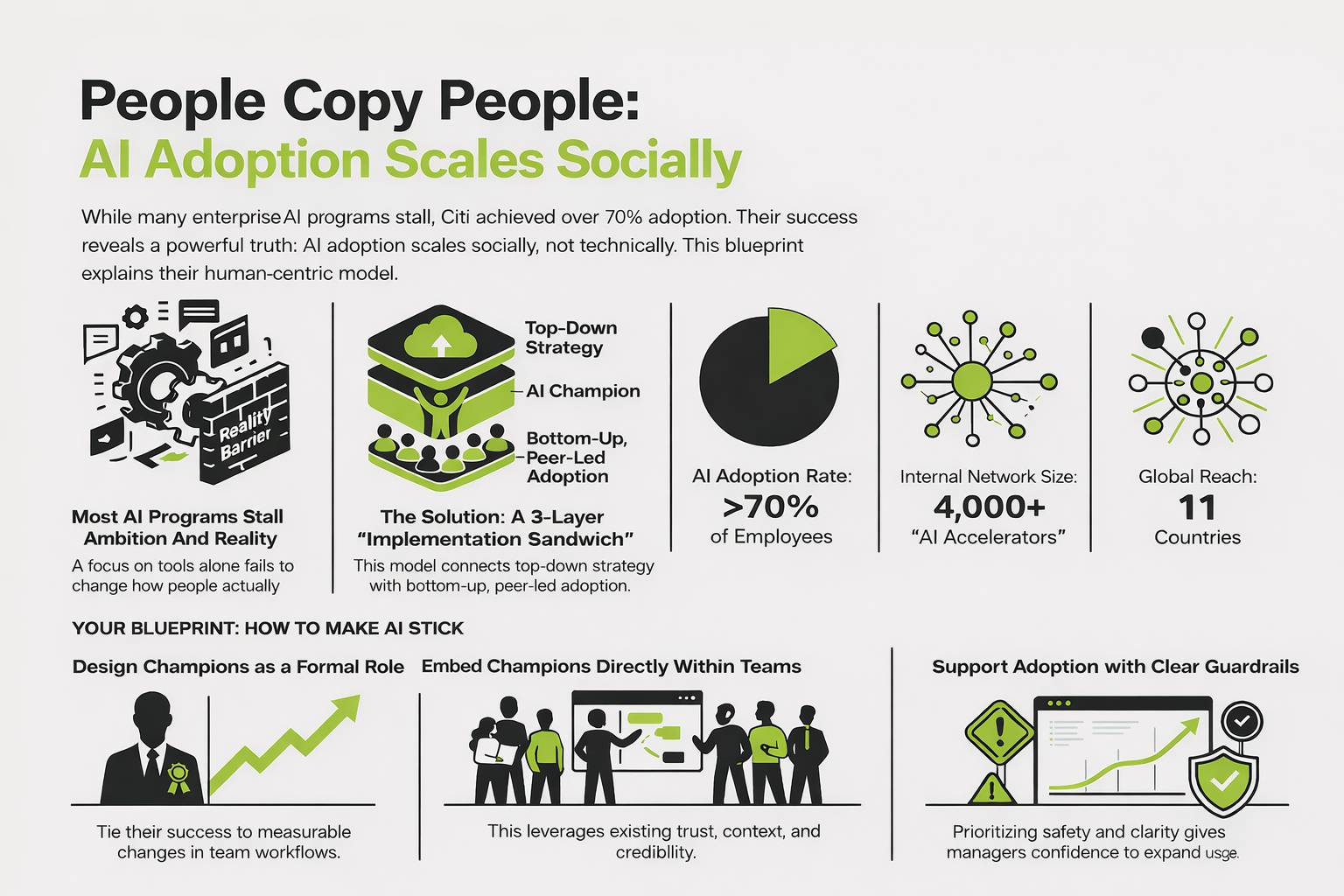

People Copy People: AI Adoption Scales Socially

When people talk about AI adoption at scale, the conversation usually starts with tools. New platforms. New copilots. New roadmaps. At Citi, the story starts somewhere quieter and far more effective: people.

Over the past two years, Citi has built an internal network of more than 4,000 AI Accelerators, supported by a smaller group of 25–30 AI Champions, reaching over 70% adoption of firm-approved AI tools across a workforce of 182,000 employees in 84 countries. The numbers were first reported by Business Insider, and they matter because they cut against a familiar enterprise pattern. Instead of central pilots stalling at the edges, Citi embedded AI into everyday work through peers.

This is not a story about experimentation. It is a story about how AI spreads inside large organizations. The most interesting part is not the tools themselves, but the structure behind them.

I have seen this pattern in early AI Champions programs we helped design long before the current hype cycle. What works consistently is a three-layer implementation sandwich.

Strategic direction and guardrails at the top. Real experimentation inside teams at the bottom. And in between, AI Champions as connective tissue. In practice, Champions succeed because they act as workflow translators, helping teams understand where AI fits, where it adds value, and how it reshapes decisions, handoffs, and accountability.

That is why our AI fluency matrix focuses on upgrading Champions into operators, coaches, and internal reference points, rather than leaving them at the level of early adopters.

What Citi’s AI Champions Actually Do (And How Much Time It Takes)

One of the most overlooked details in Citi’s AI adoption story is that AI Champions are not full-time roles. They are embedded operators, contributing alongside their day jobs.

In practice, Champions typically spend 30–60 minutes per week on the role.

That time is not spent teaching classes or pushing tools. It is spent inside real work.

AI Champions at Citi focus on a small set of repeatable behaviors:

- Showing AI in action inside live workflows, such as summarizing documents, drafting internal updates, analyzing datasets, or supporting development tasks

- Helping peers get unstuck, answering questions in context rather than delivering generic training

- Sharing concrete examples of what worked, why it worked, and how others can adapt it

- Surfacing friction and risks back to central teams, helping refine guardrails and approved use cases

This matters because Champions are not acting as instructors. They are acting as credible peers, translating AI from possibility into practice.

Their influence compounds because it travels socially. A single example shared in a team meeting or chat often leads to multiple reuses, adaptations, and follow-on improvements, without any central coordination.

The result is a system where learning stays lightweight, adoption stays voluntary, and usage stays grounded in real work. That is exactly why Citi reached over 70% employee adoption without mandating AI use or turning it into a performance requirement.